AI isn’t just robots: How to talk to young children about AI

Young children have a unique perspective on the world they live in. They often seem oblivious to what’s going on around them, but then they will ask a question that makes you realise they did get some insight from a news story or a conversation they overheard. This happened to me with a class of ten-year-olds when one boy asked, with complete sincerity and curiosity, “And is that when the zombie apocalypse happened?” He had unknowingly conflated the Great Plague with television depictions of zombies taking over the world.

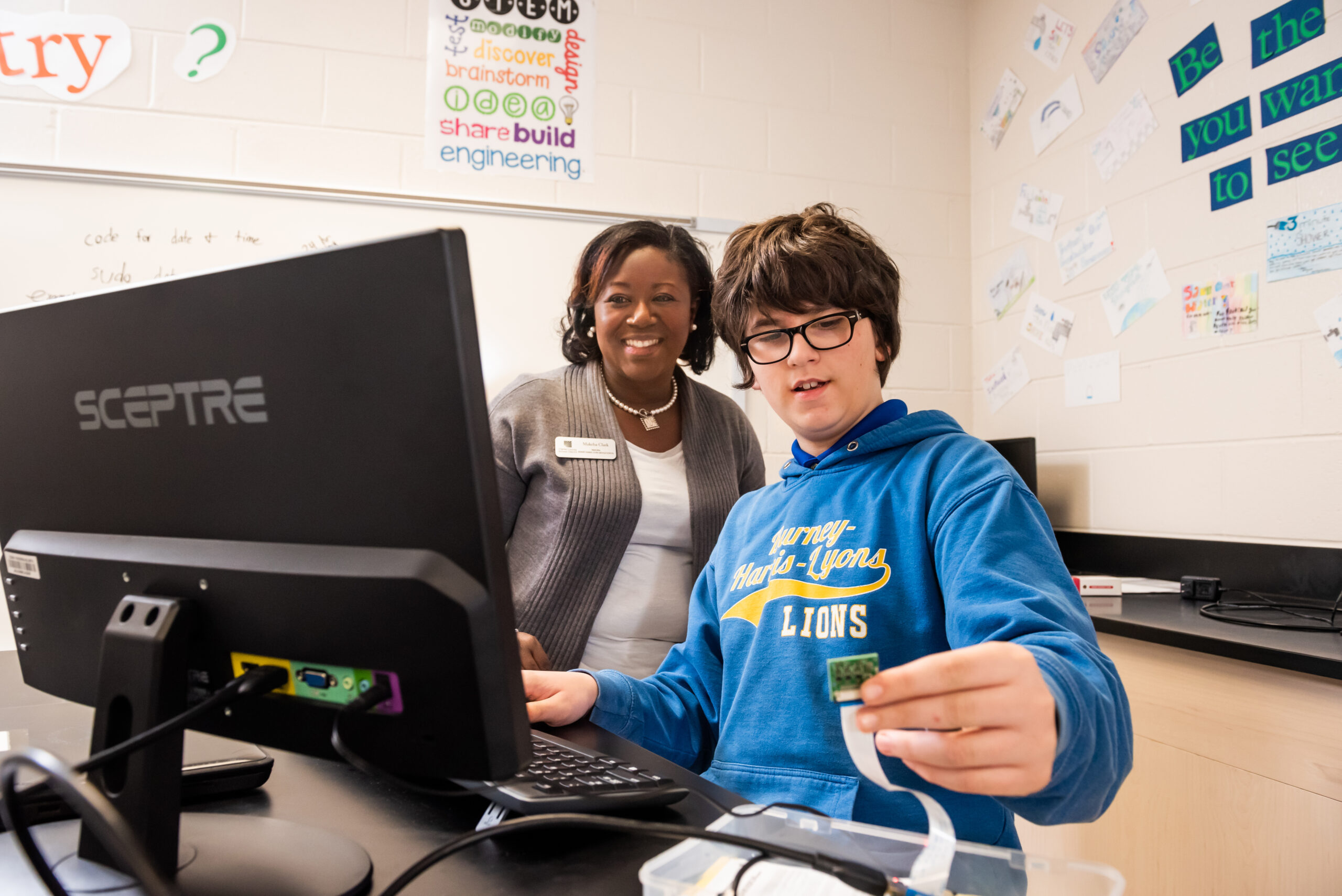

How to talk to children about AI

Absorbing media and assimilating it into your existing knowledge is a challenge, and this is a concern when the media is full of big, scary headlines about artificial intelligence (AI) taking over the world, stealing jobs, and being sentient. As teachers and parents, you don’t need to know all the details about AI to answer young people’s questions, but you can avoid accidentally introducing alternate conceptions. This article offers some top tips to help you point those inquisitive minds in the right direction.

AI is not a person

Technology companies like to anthropomorphise their products and give them friendly names. Why? Because it makes their products seem more endearing and less scary, and makes you more likely to include them in your life. However, when you think of AI as a human with a name who needs you to say ‘please’ or is ‘there to help you’, you start to make presumptions about how it works, what it ‘knows’, and its morality. This changes what you ask, how much you trust an AI device’s responses, and how you behave when using the device. The device, though, does not ‘see’ or ‘know’ anything; instead, it uses lots of data to make predictions. Think of word association: if I say “bread”, I predict that a lot of people in the UK will think “butter”. Here, I’ve used the data I’ve collected from years of living in this country to predict a reasonable answer. This is all AI devices are doing.
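For readers who like to see an idea in code, the word-association example can be sketched in a few lines of Python. This is a toy illustration, not a description of how any real AI product is built: the `observations` list is invented data, and `predict_association` is a hypothetical helper name chosen for this sketch.

```python
from collections import Counter

# Invented example data: each pair is (word heard, word that came to mind).
observations = [
    ("bread", "butter"),
    ("bread", "butter"),
    ("bread", "jam"),
    ("salt", "pepper"),
    ("salt", "pepper"),
    ("salt", "vinegar"),
]


def predict_association(word, pairs):
    """Return the partner seen most often with `word`, or None if it was never seen."""
    counts = Counter(partner for cue, partner in pairs if cue == word)
    if not counts:
        return None  # no data for this word, so there is nothing to predict from
    return counts.most_common(1)[0][0]


print(predict_association("bread", observations))   # butter
print(predict_association("cheese", observations))  # None
```

The program has no idea what bread or butter is. It only counts which pairs appeared together most often in the data it was given, and it has nothing to offer for a word it has never seen.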

When talking to young children about AI, try to avoid using pronouns such as ‘she’ or ‘he’. Where possible, avoid giving devices human names, and instead call them “computer”, to reinforce the idea that humans and computers are very different. Let’s imagine that a child in your class says, “Alexa told me a joke at the weekend — she’s funny!” You could respond, “I love using computers to find new jokes! What was it?” This is just a micro-conversation, but with it, you are helping to surreptitiously challenge the child’s perception of Alexa and the role of AI in it.

Another good approach is to remember to keep your emotions separate from computers, so as not to give them human-like characteristics: don’t say that the computer ‘hates’ you, or is ‘deliberately ignoring’ you, and remember that it’s only ‘helpful’ because it was told to be. Language is important, and we need to continually practise avoiding anthropomorphism.

AI isn’t just robots (actually, it rarely is)

The media plays a huge role in what we imagine when we talk about AI. For the media, the challenge is how to make lines of code and data inside a computer look exciting and recognisable to their audiences. The answer? Robots! When learners hear about AI taking over the world, it’s easy for them to imagine robots like those you’d find in a Marvel movie. Yet the majority of AI exists within systems they’re already aware of and are using — you might just need to help draw their attention to it.

For example, when using a word processor, you can highlight to learners that the software sometimes predicts what word you want to type next, and that this is an example of the computer using AI. When learners are using streaming services for music or TV and the service predicts something that they might want to watch or listen to next, point out that this is using AI technology. When they see their parents planning a route using a satnav, explain that the satnav system uses data and AI to plan the best route.

Even better than just calling out uses of AI: try to have conversations about when things go wrong and AI systems suggest silly options. This is a great way to build young people’s critical thinking around the use of computers. AI systems don’t always know best, because they’re just making predictions, and predictions can always be wrong.

AI complements humans

There’s a delicate balance between acknowledging the limitations of AI and portraying it as a problematic tool that we shouldn’t use. AI offers us great opportunities to improve the way we work, to get us started on a creative project, or to complete mundane tasks. However, it is just a tool, and tools complement the range of skills that humans already have. For example, if you gave an AI chatbot app the prompt, ‘Write a setting description using these four phrases: dark, scary, forest, fairy tale’, the first output from the app probably wouldn’t make much sense. As a human, though, you’d probably have to do far less work to edit the output than if you had had to write the setting description from scratch. Now, say you had the perfect example of a setting description, but you wanted 29 more examples, a different version for each learner in your class. This is where AI can help: completing a repetitive task and saving time for humans.

To help children understand how AI and humans complement each other, ask them the question, ‘What can’t a computer do?’ Answers that I have received before include, ‘Give me a hug’, ‘Make me laugh’, and ‘Paint a picture’, and these are all true. Can Alexa tell you a joke that makes you laugh? Yes — but a human created that joke. The computer is just the way in which it is being shared. Even with AI ‘creating’ new artwork, it is really only using data from something that someone else created. Humans are required.

Overall, we must remember that young children are part of a world that uses AI, and that it is likely to be ever more present in the future. We need to ensure that they know how to use AI responsibly, by minimising their alternate conceptions. With our youngest learners, this means taking care with the language you choose and the examples you use, and explaining AI’s role as a tool.

These simple approaches are the first steps to empowering children to go on to harness this technology. They also pave the way for you to simply introduce the core concepts of AI in later computing lessons without first having to untangle a web of alternate conceptions.

This article also appears in issue 22 of Hello World, which is all about teaching and AI. Download your free PDF copy now.

If you’re an educator, you can use our free Experience AI Lessons to teach your learners the basics of how AI works, whatever your subject area.

3 comments

wess trabelsi

“Can Alexa tell you a joke that makes you laugh? Yes — but a human created that joke. The computer is just the way in which it is being shared. Even with AI ‘creating’ new artwork, it is really only using data from something that someone else created.”

How many humans actually “create jokes”, compared to the number of people who hear jokes and then tell them again, just like Alexa? The same goes for artwork: no human ever creates artwork ex nihilo. The tools used have been designed by someone else, and countless influences are part of the artist’s inspiration. How is this different? John Coltrane did not invent the saxophone or the major and minor scales. That’s what “standing on the shoulders of giants” means.

Raspberry Pi Staff Jan Ander

I think the point the blog makes is that it’s important to highlight to children that computers have no understanding of what their inputs or outputs are. For example, an Alexa can tell jokes when you instruct it to do so, but an Alexa can’t find jokes funny.

And you’re right, people don’t create jokes or art from nothing. But for people, the process of creating a joke or an artwork is very different to that of an AI system. For people, it involves understanding and intention, and it’s an act of communication. People have a sense of acting in the world. AI systems don’t.

Salekin

I may be wrong, but we need to control how AI will actually shape the next generation. One group may become overly dependent on AI day to day, which would make them use less of their talents and hinder their potential. On the other hand, another group will work behind the AI to make mediums and applications that would make a few people’s lives easier and make a few people dumber. Just my personal view.