-

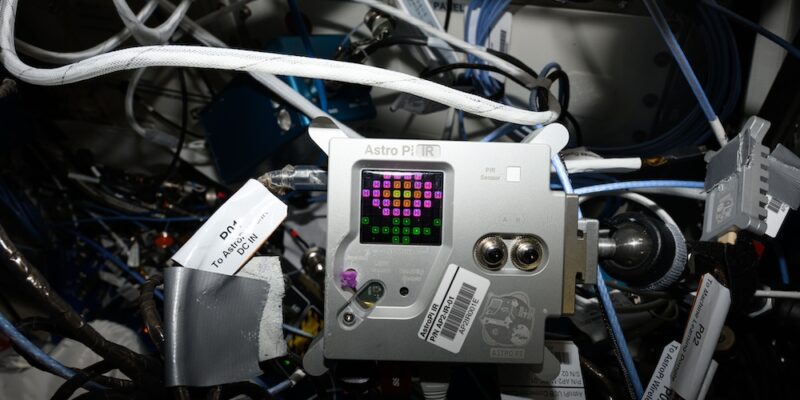

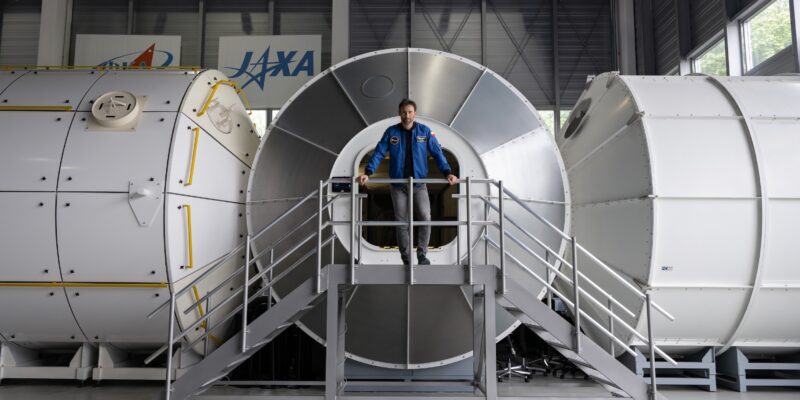

The European Astro Pi Challenge 2024/25 launches today

Sending young people's code to space, with ESA project astronaut Sławosz Uznański as ambassador

-

Experience AI: How research continues to shape the resources

A look into the thinking behind our AI literacy materials

-

Adapting primary Computing resources for cultural responsiveness: Bringing in learners’ identity

How teachers tailor lessons to their students' cultural backgrounds

-

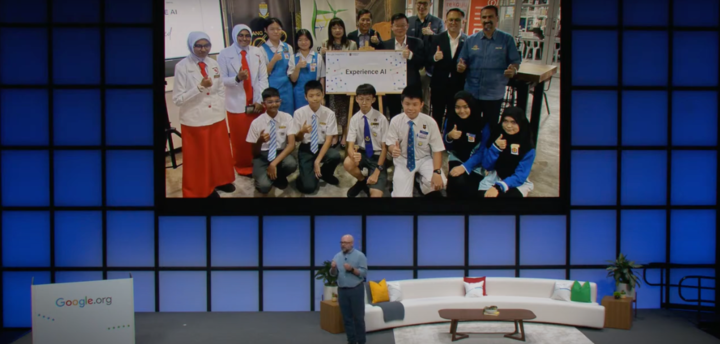

Experience AI at UNESCO’s Digital Learning Week

We attended UNESCO’s Digital Learning Week conference to present our free Experience AI resources

-

Experience AI expands to reach over 2 million students

We'll soon welcome new partners in 17 countries across Europe, the Middle East, and Africa

-

Join the UK Bebras Challenge 2024

The nation's largest computing competition is back and open for entries from schools

-

Bridging the gap from Scratch to Python: Introducing ‘Paint with Python’

A new activity to support young people as they learn to code in Python for the first time

-

Get ready for Moonhack 2024: Projects on climate change

A free, international coding challenge for young people

-

Celebrating the community: Isabel

Meet Isabel, a computer science teacher who empowers a new generation of diverse tech professionals

-

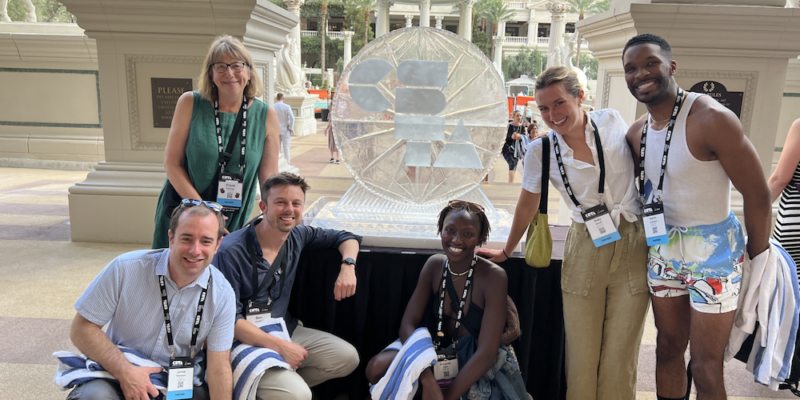

CSTA 2024: What happened in Las Vegas

An excellent opportunity for us to connect with and learn from educators

-

Why we’re taking a problem-first approach to the development of AI systems

Ben Garside explains how we can help learners understand GenAI by focusing on solving problems

-

Celebrating Astro Pi 2024

Mission Zero and Mission Space Lab give young people the confidence to engage with technology