Can algorithms be unethical?

At Raspberry Pi, we’re interested in all things to do with technology, from building new tools and helping people teach computing, to researching how young people learn to create with technology and thinking about the role tech plays in our lives and society. One of the aspects of technology I myself have been thinking about recently is algorithms.

Technology impacts our lives at the level of privacy, culture, law, environment, and ethics.

All kinds of algorithms — set series of repeatable steps that computers follow to perform a task — are running in the background of our lives. Some we recognise and interact with every day, such as online search engines or navigation systems; others operate unseen and are rarely directly experienced. We let algorithms make decisions that impact our lives in both large and small ways. As such, I think we need to consider the ethics behind them.
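As a small illustration of what "a set series of repeatable steps" can look like, here is a minimal Python sketch of Euclid's algorithm for finding the greatest common divisor of two numbers. The function name `gcd_euclid` is chosen for this example only.

```python
def gcd_euclid(a: int, b: int) -> int:
    """Greatest common divisor of two non-negative integers."""
    # Repeat the same step until nothing is left over:
    # replace (a, b) with (b, a mod b).
    while b != 0:
        a, b = b, a % b
    return a


print(gcd_euclid(48, 18))  # 6
```

The same step is applied again and again, with no judgement involved. That is what makes an algorithm predictable, and also what makes its inputs so important.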

We need to talk about ethics

Ethics are rules of conduct that are recognised as acceptable or good by society. It’s easier to discuss the ethics of a specific algorithm than to talk about ethics of algorithms as a whole. Nevertheless, it is important that we have these conversations, especially because people often see computers as ‘magic boxes’: you push a button and something magically comes out of the box, without any possibility of human influence over what that output is. This view puts power solely in the hands of the creators of the computing technology you’re using, and it isn’t guaranteed that these people have your best interests at heart or are motivated to behave ethically when designing the technology.

Who creates the algorithms you use, and what are their motivations?

You should be critical of the output algorithms deliver to you, and if you have questions about possible flaws in an algorithm, you should not discount these as mere worries. Such questions could include:

- Algorithms that make decisions have to use data to inform their choices. Are the data sets they use to make these decisions ethical and reliable?

- Running an algorithm time and time again means applying the same approach time and time again. When dealing with societal problems, is there a single approach that will work successfully every time?

Below, I give two concrete examples to show where ethics come into the creation and use of algorithms. If you know other examples or counter-examples (feel free to disagree with me), please share them in the comments.

Algorithms can be biased

Part of the ‘magic box’ mental model is the idea that computers are cold instruction followers that cannot think for themselves. So how can they be biased?

Humans aren’t born biased: we learn biases alongside everything else, as we watch the way our family and other people close to us interact with the world. Algorithms acquire biases in the same way: the developers who create them might inadvertently add their own biases.

Humans can be biased, and therefore the algorithms they create can be biased too.

An example of this is a gang violence data analysis tool that the Met Police in London launched in 2012. Called the gang matrix, the tool held the personal information of over 300 individuals. 72% of the individuals on the matrix were non-white, and some had never committed a violent crime. In response to this, Amnesty International filed a complaint stating that the makeup of the gang matrix was influenced by police officers disproportionately labelling crimes committed by non-white individuals as gang-related.
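To see how biased input can turn into biased output, here is a minimal Python sketch. Everything in it is hypothetical: the two groups, the counts, and the `labelled_gang_related` field are invented for illustration and are not taken from the gang matrix. Both groups have the same number of recorded offences, but the human-assigned label is applied unevenly.

```python
from collections import Counter

# Hypothetical records: 100 offences per group, but the human-assigned
# "gang-related" label is applied three times as often to group A.
records = (
    [{"group": "A", "labelled_gang_related": True}] * 30
    + [{"group": "A", "labelled_gang_related": False}] * 70
    + [{"group": "B", "labelled_gang_related": True}] * 10
    + [{"group": "B", "labelled_gang_related": False}] * 90
)


def watchlist_shares(records):
    """Return each group's share of the entries flagged for a watchlist."""
    flagged = Counter(r["group"] for r in records if r["labelled_gang_related"])
    total = sum(flagged.values())
    return {group: count / total for group, count in flagged.items()}


shares = watchlist_shares(records)
print(shares)  # {'A': 0.75, 'B': 0.25}
```

The counting step treats every record identically, yet group A ends up with three quarters of the list, because the skew was already present in the labels it was given.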

Who curates the content we consume?

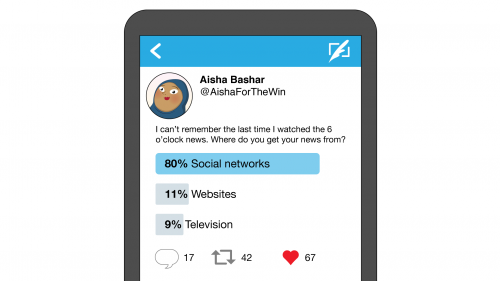

We live in a content-rich society: there is much, much more online content than one person could possibly take in. Almost every piece of content we consume is selected by algorithms: the music you listen to, the videos you watch, the articles you read, and even the products you buy.

Some of you may have experienced a week in January of 2012 in which you saw a lot of either cute kittens or sad images on Facebook; if so, you may have been involved in a global social experiment that Facebook engineers performed on 600,000 of its users without their consent. Some of these users were shown overwhelmingly positive content, and others overwhelmingly negative content. The Facebook engineers monitored the users’ actions to gauge how they responded. Was this experiment ethical?

In order to select content that is attractive to you, content algorithms observe the choices you make and the content you consume. The most effective algorithms give you more of the same content, with slight variation. How does this impact our beliefs and views? How do we broaden our horizons?
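The "more of the same" effect can be sketched in a few lines of Python. This is a deliberately simplified toy, not how any real platform works: the `recommend` function, the catalogue, and the starting history are all assumptions made for this example, and the simulated user accepts every recommendation.

```python
from collections import Counter


def recommend(history, catalogue):
    """Pick the topic the user has consumed most often so far."""
    counts = Counter(history)
    # Ties are broken by catalogue order.
    return max(catalogue, key=lambda topic: counts[topic])


catalogue = ["kittens", "news", "science", "music"]
history = ["kittens", "news", "kittens"]  # hypothetical starting history

# Simulate a user who always consumes whatever is recommended.
for _ in range(10):
    history.append(recommend(history, catalogue))

print(Counter(history))  # Counter({'kittens': 12, 'news': 1})
```

A slight early preference for one topic is reinforced on every round, and the topics the user never tried ("science", "music") are never shown at all. The sketch leaves out the "slight variation" a real system would add.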

Why trust algorithms at all then?

People generally don’t like making decisions; almost everyone knows the discomfort of indecision. In addition, emotions have a huge effect on the decisions humans make moment to moment. Algorithms on the other hand aren’t impacted by emotions, and they can’t be indecisive.

While algorithms are not immune to bias, in general they are way less susceptible to it than humans. And if a bias is identified in an algorithm, an engineer can remove the bias by editing the algorithm or changing the dataset the algorithm uses. The same cannot be said for human biases, which are often deeply ingrained and widespread in society.

As is true for all technology, algorithms can create new problems as well as solve existing problems.

That’s why there are more and less appropriate areas for algorithms to operate in. For example, using algorithms in policing is almost always a bad idea, as the data involved is recorded by humans and is very subjective. In objective, data-driven fields, on the other hand, algorithms have been employed very successfully, such as diagnostic algorithms in medicine.

Algorithms in your life

I would love to hear what you think: this conversation requires as many views as possible to be productive. Share your thoughts on the topic in the comments! Here are some more questions to get you thinking:

- What algorithms do you interact with every day?

- How large are the decisions you allow algorithms to make?

- Are there algorithms you absolutely do not trust?

- What do you think would happen if we let algorithms decide everything?

Feel free to respond to other people’s comments and discuss the points they raise.

The ethics of algorithms is one of the topics for which we offer you a discussion forum on our free online course Impact of Technology. The course also covers how to facilitate classroom discussions about technology — if you’re an educator teaching computing or computer science, it is a great resource for you!

The Impact of Technology online course is one of many courses developed by us with support from Google.

22 comments

Will

Algorithms tend to rely upon a categorical approach. However, life is rather more flexible. Questions that offer a small number of categories as answers may fail as these categories don’t cover all cases. Expanding the question to accommodate all of the real-world complexity may vastly increase algorithmic complexity. Perhaps it may be better to determine the level of complexity necessary to achieve an adequate goal? What would be the cost of advertising a product to someone who isn’t in the target audience, or of prescribing medication to someone who is outside the demographic for which it has been proven safe and effective?

[Edited by moderator because original examples used were inadvertently tendentious]

RichR

A list of hashtag options with the “preferred option” first or easier to enter.

Or, the “preferred party” being listed first on ballot papers to very subtly gather in any uncertain voters?

Michal Smrž

2 + 2 = 5 because we need it to be five at the social/political moment? Where have I heard that before?

An algorithm can’t be ethical or unethical, just as a knife is not responsible for cutting an onion or children. It’s always… the user.

Pat Senn

An algorithm itself might not be unethical, but its creator can definitely add bias, either by the way it determines its path or by the data set it relies on for information. Think about doing a survey on whether foxes or weasels are worse in the hen house, and only questioning people in the city.

Fritz

I earn my living writing algorithms that help making decisions, mostly for logistics-problems. I love the fact that my programs can help reduce pollution and stress for planners, while at the same time making better plans (like with punctual arrivals, or with drivers who already know their customers). Although the methods of these algorithms are basically as simple as bouncing a ball in an egg-carton, I am painfully aware that it is very difficult to explain what is going on inside, or to convey the dire consequences of lousy input-data.

It makes me sad to read articles about “algorithms and ethics” because I feel that often they quickly create a strong association in me as the reader: Between my unease towards the invisible and complicated on the one hand (“algorithm” could be replaced by “radiation” or “chemical” in this role), and ruthless profit-seeking, arrogant carelessness and other unethical behaviour on the other (think “Cambridge Analytica”, “Chernobyl”, “global warming”). I wish there was a better way to distinguish between technology as “simply a tool”, and the conundrums we run into when it is used “system-wide” – by lots of people, under all sorts of circumstances, for many goals… Inevitably it will then often end up being used carelessly or with evil intent – and yet possibly affect us all!

Raspberry Pi Staff Janina Ander

I think you’re raising a good point, Fritz. I used to be a geneticist, and I see this ‘unease towards the invisible and complicated’ you’re mentioning in the people who are worried about genes in their tomatoes because they’ve heard a lot of bad things about GM crops.

It’s very important to make sure we have nuanced conversations about technology instead of black-and-white ones, because anything that creates the association “technology X = unethical” or “technology Y = ethical” is unproductive. Nevertheless, it’s equally unproductive to not think/talk about these topics at all. That’s why we’ve tried to make this post a conversation starter and not just an opinion piece. People need to make up their own minds about the questions involved, and I think there aren’t any easy, across-the-board answers to any of them.

Fritz

Thank you for your reply, Janina, and thank you very much for the invitation to lead a nuanced conversation about the difficult and controversial questions posed by these new technologies! Still, the longer I think about it, the more I feel that asking about “the ethics of algorithms” is as unhelpful as it would be to ask about “the beauty of paint” (not paintings). Even if we limit the discussion to decision-making algorithms: True, good ones have values baked into them (e.g. the price of carbon emissions relative to the cost of labour), but the ethical questions are outside: Do we have enough reliable data and understanding to justify developing the algorithm? When should we use it? And should we follow its advice? (Btw., if in doubt: Toss a coin! :-)

somebodywhoisntme

Your algorithms don’t do it for ethical reasons. It’s only about time and money. If they acted ethically, they would route everything by train, which would be more environmentally friendly, but it’s logistics and, as I said, only time and money count there.

Robert Alderton

Given ethics are generally in the eye of the beholder, it’s probably not possible to define an algorithm as either ethical or not; accurate or inaccurate is the better description. However, whether those same algorithms are used ethically is probably the thing to debate. The Cambridge Analytica scandal is probably the poster child example in recent times. The targeting algorithms used were obviously accurate. However, the manipulation of people using those algorithms was of questionable ethics.

Raspberry Pi Staff Mac — post author

I agree Robert, and I like the idea of accurate vs inaccurate. Especially for those who do not know as much about how an algorithm works, this is a great starting point. The consideration can be extended to think about whether an inaccurate algorithm would impact people and what the ethics of that are. If a small inaccuracy can lead to large impacts, maybe that isn’t an area where an algorithm should be used. On the other hand, humans can also be inaccurate so perhaps an algorithm would help. As you mentioned the same can happen for accurate algorithms – I think it is a great starting place to begin discussing an algorithm.

Simon Lambourn

Another example which troubles me is the use of algorithms in recruitment. Nowadays, applying for a job can involve many stages with the first stages involving questionnaires or machine scoring of a Curriculum Vitae – you don’t even come into contact with a human until the later stages. There is no way to know if you have been selected or rejected ‘fairly’, and whether certain sections of society are disadvantaged by such a process.

Starbeamrainbowlabs

Absolutely. Personally I’m not sure whether all applicants are actually given a fair chance. For example, someone with dyslexia might be a brilliant programmer, but accidentally make a few spelling errors in their CV.

Raspberry Pi Staff Liz Upton

I do a lot of hiring here at Raspberry Pi – and I’d never DREAM of using an automated filter for applications for the reasons you mention! No applicant is ever going to tick all the boxes, some have unusual but perfectly valid backgrounds, and some of these filters are totally opaque; in the interests of getting great applicants we’re more than ready to hand-screen everything we get here rather than subbing out to an algorithm that’s going to lack the flexibility of a human.

somebodywhoisntme

How could someone discuss the „ethics“ of algorithms??

In my language ethics and morality are two different pairs of shoes, but it seems that in English they’re the same. But anyway.

To be able to act ethically, there must exist an individual which is aware of its own self, has some kind of senses to interact with the environment, and then is able to have its own opinion about good and bad behavior, so it can categorize the information its senses take up as good/bad/neutral and act accordingly.

Pls show me one algorithm which is aware of this??

„Morality is just a fiction used by the herd of inferior human beings to hold back the few superior men.”

*Friedrich Nietzsche

Also maybe you should read his book „Beyond good and evil“.

Computers do logical work and if there are good algorithms they’ll be used for decades over the whole planet and outside so why mess this up with illogical human behavior. Ethics change from decade to decade, even from place to place so it’s not a constant humanity should rely on.

Jacek Q.

Imagine that you are the AI algorithm of a self-driving car and you have to make a hard decision. What do you choose?

Look at this site http://moralmachine.mit.edu/ and try to “solve” the test.

Viktor

“Are the data sets [algorithms] use to make these decisions ethical and reliable?”

Even if they were, there is something else about them: data comes necessarily from the past. Pretending to predict the future, “machine learning” algorithms will instead re-mix the past for you. They are conservative by nature.

Cory Doctorow makes this point, great as always:

http://blog.lareviewofbooks.org/provocations/neophobic-conservative-ai-overlords-want-everything-stay/

Gavin McIntosh

“2 + 2 = 5 because we need it to be five at the social/political moment? Where have I heard that before?”

Akkad Daily?

AI ethics is very interesting because to understand “ethics” programming, we need to understand human ethics. We sort of know instinctively, but how do we get enough detail to code for it? Will AIs need to learn ethics? Can people learn ethics? Those old Greek guys say “virtue” must be practiced. But could it lead to an artificial conscience that everyone will have monitoring them? An Ethics app? What is the Ethics Turing test and how many Politicians could pass it?

heater

I don’t want to talk about ethics and morals much. Theologians, philosophers and others have been arguing about that for centuries, with no definitive conclusion yet.

However I would like to comment on the questions posted in this blog post in a very concrete and practical way.

– What algorithms do you interact with every day?

I have no idea. How can I know? Of course my computer is teeming with algorithms, for all kinds of things in the operating system and applications. Everything from Euclid’s two-thousand-year-old GCD to the Cooley–Tukey FFT algorithm and Tony Hoare’s Quick Sort of the 1960’s. And many more besides.

Of course as soon as I hit the net I’m dealing with Google’s Page Rank algorithm and all kinds of AI/deep learning etc that I have no idea about.

– How large are the decisions you allow algorithms to make?

This is a dumb question. I have no control over the size of decisions those algorithms make. Out there on the net, used by other people to make decisions about my life. For example:

My bank may decide to loan me money or not. Depending on what their algorithms tell them about my financial history and whatever else they can find.

Insurance companies will adjust my insurance rates depending on what their algorithms tell them about my medical history, my driving record and whatever else they can find.

Companies may decide to employ me or not. Depending on what their algorithms tell them about me.

And so on, and so on, more and more every day.

– Are there algorithms you absolutely do not trust?

See above. I can trust the likes of Euclid’s GCD and Tony Hoare’s Quick Sort. I cannot trust anything I do not know even exists or where it is or who is using it or how it works.

– What do you think would happen if we let algorithms decide everything?

Interesting question. We seem to be performing the experiment to find out as we speak. On a global basis.

TL;DR

Algorithms do not have ethics or morals. The people who use them as tools to get what they want done might have (although the latter is not always clear to me).

Gavin McIntosh

Can algorithms be unethical?

Depends if they are programmed that way.

And who is to judge what is ethical?

An example is an autonomous vehicle, does it kill the driver to avoid school kids?

Research is coming in that shows people trust autonomous vehicles more than people driving. Do these people with trust believe the car is more ethical, or just better at predicting and reacting as well as driving “safer”?

Can an unethical algorithm be taught to be ethical?

If it passes an Ethical Turing test, is it ethical or just pretending to be ethical?

Here in Australia we have Robo-Debt, a computer algorithm that predicted tax debts based on income averaging, even for dead people. Was this ethical or just bad programming? There are indications that more than 150,000 people paid debts they might not have owed. Here the question becomes: do we trust government? GIGO: garbage in, garbage out. Ethical behavior is what people can trust and believe in. Is that the test?

Andrew

The trouble with ethical discussions is they include distinctions between intent, purpose and outcomes, all of which may be derailed by circumstances. Thus an ax is an ax (existentially Neutral); it might be used to injure someone (Bad) or free a trapped person (Good). Alternatively, the existence may be neutral, the intent may be good, the outcome bad.

Algorithms which do harm to (hostile) individuals in our defence are arguably good; the intent is harm (bad) but the outcome is (good).

As the lawyers have it; Circumstances alter cases. There are no hard ethical rules for algorithms, just circumstances. Good judgment is needed when deploying them.

Tally ho! ;-)

Roman Miller

Ethics in algorithms is a new thing to me because I had never thought about it before. I interact daily with different algorithms, but there is one which I think has an ethical problem that needs to be fixed. When I enter my university, there is a walk-through barrier on which we scan our entry card, and it opens for 3 seconds. Now, if someone is carrying a lot of things, or an elderly person cannot pass through in 3 seconds, the alarm/siren starts and embarrasses the person passing through. So, this should be dealt with.

Delubio L de Paula

The worst of them all. Twitter, Facebook and Google Search Engine. These companies are destroying countries all over the world with their evil agenda.

And I mean it, they’re evil to the core.

I can assure you, they are meddling with elections from the USA to Brazil.

We are experiencing a shift towards Conservatism all over the world, and these people are shutting down the voices of conservatives. Progressives run these companies, all their algorithms are made by progressive minds, and the result is a disaster.

Here in Brazil, our President Jair Bolsonaro was elected with almost 60% of the votes last election, and he only used US$1.8 million for his campaign.

That’s how bad people want conservatism back in our lives.

But we’re being shut down on those platforms, all because of algorithms.