The challenges of measuring AI literacy

Measuring student understanding in computing education is not an easy task. As AI literacy becomes an important pillar in computing education, defining and accurately measuring students’ understanding of concepts and their skills is an even greater challenge.

In a recent seminar in our series on teaching about AI and data science, researcher Jesús Moreno-León (Universidad de Sevilla) talked about his work in developing assessment tools for computational thinking (CT) and AI literacy. Jesús is also co-founder of Programamos, a non-profit organisation that promotes the development of computational thinking, supporting teachers through training and sharing resources.

Developing assessment tools in computer science

Jesús began by discussing the recent development of computer science assessment tools. Together with Gregorio Robles (Universidad Rey Juan Carlos), he created Dr Scratch, a web-based tool that assesses the quality of Scratch projects and detects errors and bad programming habits (e.g. dead code). Projects are scored on their use of computational thinking concepts (e.g. parallelism, conditional logic) and of desirable programming practices (e.g. naming sprites, removing duplicate scripts), giving students and teachers feedback they can use to iteratively improve their Scratch projects.

Alongside measuring students’ programming skills, Jesús also shared work by Marcos Román-González (Universidad Nacional de Educación a Distancia) to develop the Computational Thinking test (CTt), a 28-item assessment tool designed to measure the computational thinking skills of students aged 10 to 16. Two collaborators, María Zapata and Estefanía Martín (Universidad Rey Juan Carlos), further adapted these items to create the Beginners Computational Thinking test (or BCTt), an unplugged assessment suitable for younger learners aged 5 to 10.

Teaching about AI in Spain

Jesús also described his more recent work at the Ministry of Education and Vocational Training in Spain to promote computer science at all educational levels. One initiative, La Escuela de Pensamiento Computacional e Inteligencia Artificial (or the School of Computational Thinking and Artificial Intelligence), supported Spanish teachers through training and resources to introduce CT and AI into the classroom. Over 400 teachers and 7000 students took part across Spain, using unplugged activities and tools such as Machine Learning for Kids and LearningML, which allow students to classify text and images using machine learning. Older students created apps using MIT App Inventor. When evaluating the design of the curriculum, the team found they had strong instruments to measure the development of CT (such as the assessment tools described above), yet nothing to measure AI literacy.

A tool for measuring AI literacy

The lack of valid AI literacy assessment tools led the team to develop the AI Knowledge Test (or AIKT), a 14-item survey consisting of multiple-choice questions designed to measure students’ understanding of AI. The instrument was inspired by previous work in the field and relevant research (e.g. the AI4K12 framework).

An example from the AI Knowledge Test

An example of one of these items is presented below. Can you solve it? The answer is at the bottom of this article.

Q1. Which of the following strategies would be most appropriate for teaching a computer to recognise photos of apples?

- Train the computer with photos of dogs

- Train the computer with several photos of different apples, taken in different places and contexts

- Train the computer with several similar photos of the same apple, taken in the same place

- Train the computer with several identical copies of the same photo of an apple

Testing the test

In a study on the impact of programming activities on computational thinking and AI literacy in Spanish schools, the authors tested these knowledge-based items with over 2000 students to assess the test’s reliability (e.g. internal consistency), which is one measure of the quality of a survey or test. They found that one item (“As a user, the legal regulation that is approved regarding AI systems will affect my life”) did not correlate with the other items. This left 13 items, which were found to have sufficient internal consistency, meaning that the items correlated well enough with one another to be treated as measuring a single underlying construct (i.e. “AI knowledge”). They concluded that the assessment tool needed a higher ceiling and needed to address common misconceptions. The authors also learned that teachers needed free and open-source tools that have low barriers to entry, such as not requiring registration, and that are suitable for classroom use, such as by limiting the data sent to the cloud.
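To make the idea of internal consistency more concrete, here is a minimal sketch of two statistics that are commonly used for this kind of check: Cronbach’s alpha for the test as a whole, and an item-rest correlation for each item. This article does not say which statistics the study itself used, so treating these two as the method is an assumption. The function names and the `demo` responses are made up for illustration and are not data from the study.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows, one row of item scores per student."""
    k = len(scores[0])  # number of items in the test
    item_vars = [variance(col) for col in zip(*scores)]  # variance of each item
    total_var = variance([sum(row) for row in scores])   # variance of total scores
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def item_rest_correlation(scores, i):
    """Pearson correlation between item i and the sum of all the other items."""
    item = [row[i] for row in scores]
    rest = [sum(row) - row[i] for row in scores]
    mi, mr = mean(item), mean(rest)
    cov = sum((x - mi) * (y - mr) for x, y in zip(item, rest))
    denom = sqrt(sum((x - mi) ** 2 for x in item) * sum((y - mr) ** 2 for y in rest))
    return cov / denom if denom else 0.0  # 0.0 if an item or the rest has no variance


# Hypothetical responses (1 = correct, 0 = incorrect), NOT data from the study.
# Each row is one student; each column is one item.
demo = [
    [1, 1, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 1, 0, 0],
]

for i in range(len(demo[0])):
    print(f"item {i}: item-rest r = {item_rest_correlation(demo, i):.2f}")
print(f"alpha = {cronbach_alpha(demo):.2f}")
```

In this toy data the last item has a much weaker item-rest correlation than the first, which is the kind of pattern that might lead a team to drop an item from the scale. Real analyses would use far more responses and typically a dedicated statistics package.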

AI literacy in the generative era

With the rise of generative AI tools like ChatGPT or Google’s Gemini, Jesús and his colleagues felt their AI literacy assessment tool needed to focus on the capabilities of generative AI tools. They also felt they needed to take a broader view of AI and focus on additional dimensions, such as the social and ethical implications of AI tools. They are, therefore, currently revising their assessment items to align with several common frameworks, including the SEAME framework and AI Learning Priorities for All K–12 Students.

An example from the revised AI Knowledge Test

One of the revised items is presented below. Can you solve it? The answer is revealed below.

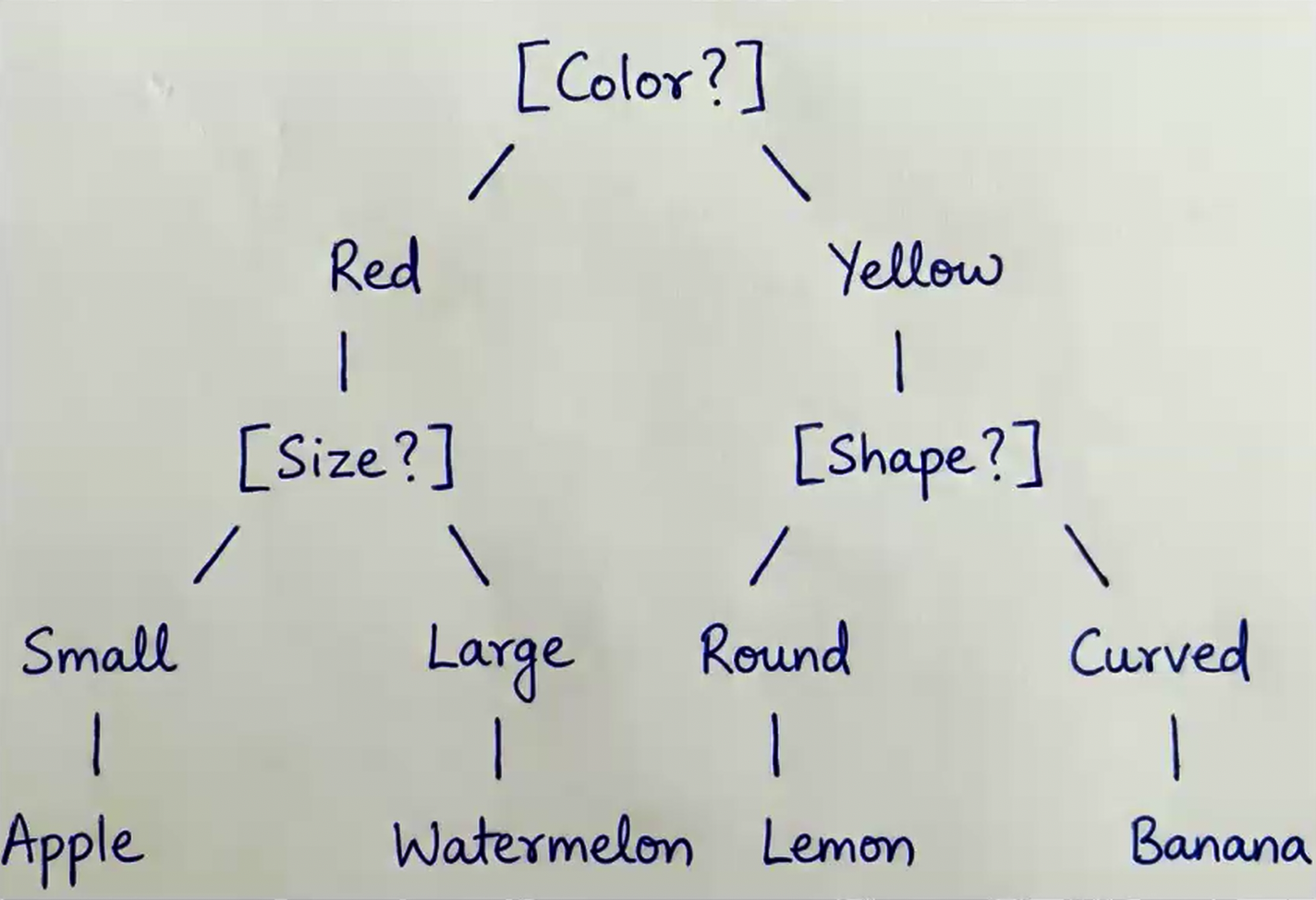

Q2. You have asked your students to design a decision tree to classify different fruits based on three characteristics: color, size, and shape. To check whether the proposed solution shown above is correct, you are going to test it with a small, round, yellow apple. How does the decision tree classify it?

- Apple

- Watermelon

- Lemon

- Banana

Learn more about this work

Jesús concluded the seminar by describing his intentions to collaborate with others to test the revised AI literacy instrument with students in early 2026. We look forward to hearing about their results!

You can watch Jesús’s whole seminar here:

If you are interested in learning more about Jesús and his work, you can read about his development of the AI Knowledge Test (or AIKT) here and the Computational Thinking test (CTt) here, or look at the original items here. You can also learn about the Beginners Computational Thinking test (BCTt) by watching a Raspberry Pi research seminar about it or reading about it here.

Join our next seminar

In our current seminar series, we’re exploring applied AI and how AI can be taught across the curriculum. In our next seminar in this series on 17 March at 17.00 UK time, we welcome Rebecca Fiebrink (University of the Arts London) who will explore the questions of how and why we might teach AI for creative practitioners, including children, students, and professionals.

To take part in the seminar, click the button below to register. We hope to see you there.

The schedule of our upcoming seminars is available online. You can catch up on past seminars on our blog and on the previous seminars and recordings page.

Answers

- Q1: 2

- Q2: 3
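To see how a decision tree can give the Q2 answer, it helps to trace one by hand. The tree from the image is not reproduced in the text of this article, so the sketch below uses a hypothetical tree, chosen only because it uses the same three characteristics and leads to the same answer. The function name, the branch order, and the feature values are all assumptions.

```python
def classify(fruit):
    """Walk a hypothetical decision tree (NOT the one from the image in Q2)."""
    if fruit["shape"] == "elongated":
        return "Banana"
    if fruit["size"] == "large":
        return "Watermelon"
    if fruit["color"] == "yellow":
        return "Lemon"
    return "Apple"


# The test case from Q2: a small, round, yellow apple.
test_fruit = {"color": "yellow", "size": "small", "shape": "round"}
print(classify(test_fruit))  # prints "Lemon"
```

The fruit is an apple, but this tree never reaches the “Apple” leaf for anything yellow, so it is labelled a lemon. Testing a tree with an unusual example like this is a quick way to show that a proposed solution is not correct.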
